在Metaverse世界中,每个人和物都将拥有虚拟角色。他们在其中能得到与现实生活相似乃至超越现实的身心体验,例如玩游戏、看电影、艺术创作和购物。

In the Metaverse, everyone and everything will get a virtual representation. Players can have experiences similar to, or even beyond, real life, such as playing games, going to the movies, creating art, or shopping.

与当下互联网社交头像有所不同,虚拟角色能够帮助人类个体在虚拟世界实现独一无二的属性。通过实现脸部五官、情绪表情、手势姿态变化,从而提升互动感和真实感。

Unlike today's social media profile avatars, virtual avatars give each individual a unique identity in the virtual world. By rendering facial features, emotional expressions, gestures, and changes in posture, they enhance the sense of interaction and realism.

换句话说,虚拟角色是人类通往虚拟世界的通行证,是人类在虚拟世界的身份标识。

In other words, a virtual avatar is a person's passport to the virtual world and their identity within it.

很明显,定制化游戏角色和用户原创内容是元宇宙的重要支柱,不过创作虚拟角色是一个复杂的过程,也会产生诸多需要攻克的难题。

Clearly, customized game characters and user-generated content are important pillars of the Metaverse. However, creating virtual characters is a complex process that poses many challenges to overcome.

育碧(Ubisoft)是研发、发行与销售互动式娱乐游戏与服务的领先企业。自1996年在中国建立工作室以来,育碧一直站在中国游戏产业的前沿。

Ubisoft is a leader in the development, distribution and sales of interactive entertainment games and services. Since establishing a studio in China in 1996, Ubisoft has been at the forefront of China's game industry.

目前,育碧中国在上海和成都拥有两处工作室,1000多名来自国内外游戏制作、图像设计、动画、程序、人工智能、音效、测试及数据管理方面的专业人才。

Today, Ubisoft China has two studios, in Shanghai and Chengdu, with more than 1,000 professionals from China and abroad working in game production, visual design, animation, programming, artificial intelligence, sound, testing, and data management.

在构建虚拟化身方面,育碧进行了长时间的探索,并创建了一套行业领先的技术体系。

Ubisoft has spent a long time exploring how to build virtual avatars and has developed an industry-leading set of technologies for it.

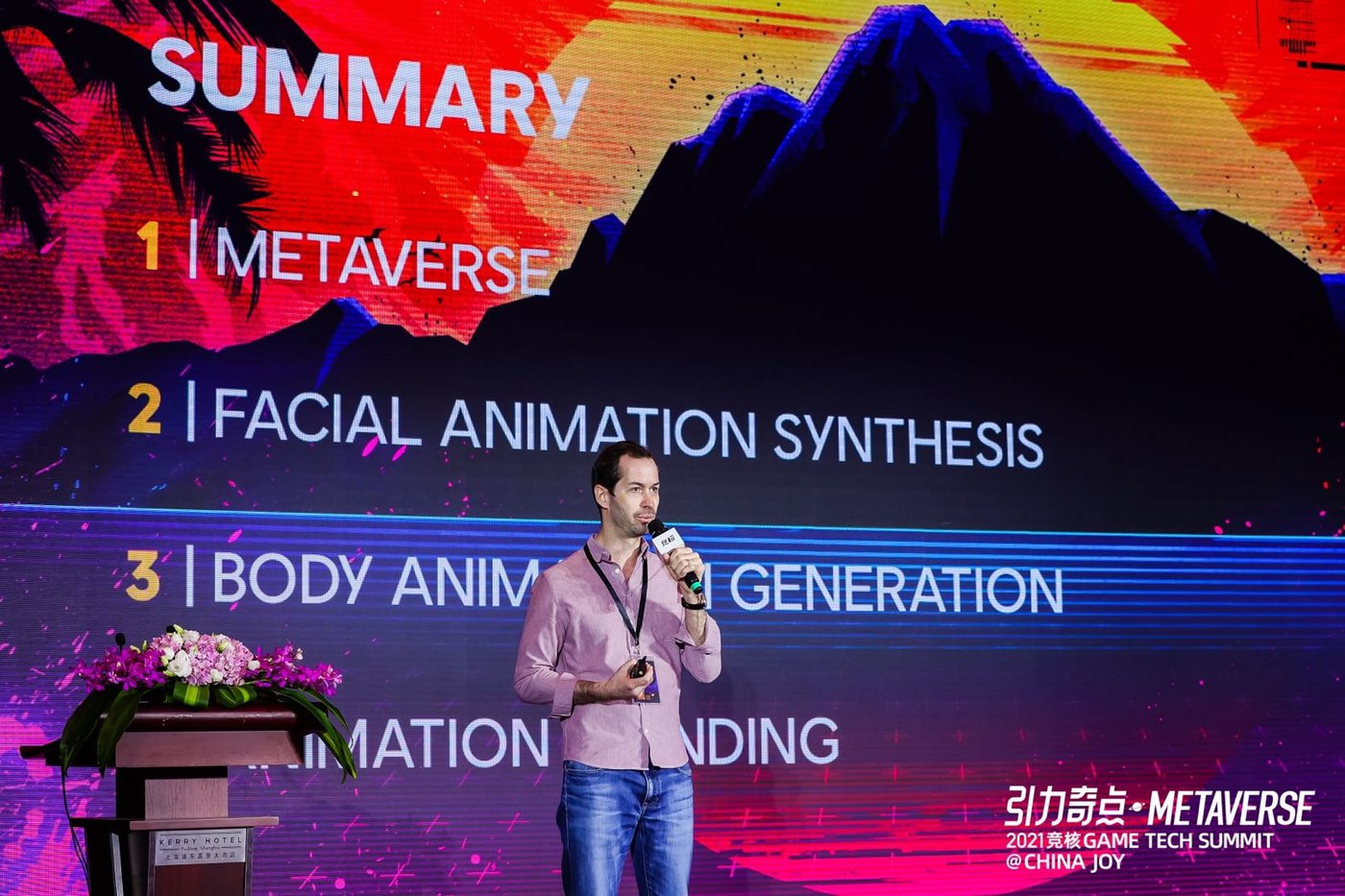

在引力奇点·Metaverse峰会中,育碧中国AI&数据实验室总监Alexis Rolland,介绍了育碧对于Metaverse的整体看法,以及他们在创建虚拟化身过程中所遇到的挑战和解决方案。

At the Gravity Singularity Metaverse Summit, Alexis Rolland, Director of Ubisoft China AI & Data Lab, presented Ubisoft’s definition of the Metaverse, the challenges his team encountered in creating virtual avatars, and the solutions they developed.

以下是育碧中国AI&数据实验室总监Alexis Rolland演讲实录,略经编辑:

The following is a lightly edited transcript of the speech by Alexis Rolland, Director of Ubisoft China’s AI & Data Lab:

首先,很荣幸来到这里。我的名字叫Alexis Rolland,是育碧中国AI&数据实验室总监。育碧上海和成都两家工作室都有我们这个技术团队的成员。我们运用机器学习,研发工具为游戏研发赋能。

My name is Alexis Rolland. Thank you for the introduction. I am the director of Ubisoft China’s AI & Data Lab. We are a technology team based in Shanghai and Chengdu. We do R&D and develop tools that empower game production teams with machine learning.

我们制作了许多著名的游戏项目,包括《彩虹六号:围攻》、《刺客信条》、《孤岛惊魂》、《舞力全开》等等。这个月,我们在中国发布了新款游戏《疯狂兔子》。

我估计大家都知道育碧,至少听说过。我们是一家游戏大厂,大家一起来看下宣传视频来了解一下。

I assume most of you know Ubisoft, or at least I hope you have heard of us. We are a major video game developer, but just in case, I prepared a short video to remind you of the games we make.

育碧是最早来中国投资的外资游戏厂商之一,1996年成立了上海工作室。2008年,育碧在中国的第二家工作室在成都成立。

Ubisoft was one of the first video game developers to enter China, opening our first studio in Shanghai as early as 1996. Later, we opened a second studio in Chengdu in 2008.

如今,两家工作室拥有超过1000名员工,论及研发团队规模,育碧中国在育碧全球位列第三。我们参与了知名IP的研发,包括《彩虹六号:围攻》、《刺客信条》、《孤岛惊魂》、《舞力全开》,以及这个月要在中国发布新作的《疯狂兔子》。

Today, the two studios together have more than 1,000 employees, which makes Ubisoft China the company's third-largest creative force. We work on its most famous franchises, including Rainbow Six Siege, Assassin's Creed, Far Cry, Just Dance, and Rabbids, for which we are releasing a new game in China this month.

在今天的演讲中,我将主要讲述四个方面的内容。首先,我想再介绍一下Metaverse,或者说是我们育碧对Metaverse的理解。然后,我将和大家探讨和创建虚拟角色有关的三个常见的挑战,面部动画生成、动作动画生成和动画合成。

Today's presentation will cover four parts. First, once again, an introduction to the Metaverse, or at least to how we define it at Ubisoft. Then I will address three common challenges related to creating virtual avatars: facial animation, body animation, and animation blending.

那么首先,让我们讲讲Metaverse。

在育碧,我们把Metaverse定义为与现实世界平行的强化虚拟世界,在这个世界中玩家能够以虚拟化身的形象做他们在现实生活中可以做的几乎所有事情。这包括玩游戏、听音乐会、看电影,艺术创作或购物。

But let's start with the Metaverse.

We define the Metaverse as an enhanced virtual world parallel to the real world, where players can use a personalized avatar to do almost everything they could do in real life. That includes playing video games, but also going to concerts or movies, and even creating art or shopping.

我们认为Metaverse的建立和存在依赖于六大基础要素。

We think the Metaverse relies on six foundational pillars.

第一是社会属性。Metaverse首先是一个社交场所,玩家能够在其中通过有吸引力的互动关系进行互动,这对现实生活中的社交而言是一种补充甚至是替代。

The first pillar is socialization. First and foremost, the Metaverse is a social hub where players can interact through engaging relationships that complement or even replace real-life socialization.

第二是持续化。因为在玩家断开连接后,这个虚拟世界仍在持续运作,它不依赖于玩家而存在,能在没有玩家的情况下存续下去。

The second pillar is persistence: the Metaverse keeps running after players disconnect. It does not rely on any player's presence and lives on without them.

第三是UGC创作,以及数字内容生产。在Metaverse中,由于易获得和易操作的工具的存在,玩家有能力与这个虚拟世界进行互动,并参与创建工作。也就是说,Metaverse实际上模糊了创作者和玩家之间的界限。

The third pillar is user-generated content, and digital content creation in general. In the Metaverse, players should be able to interact with and contribute to the digital universe, thanks to easily accessible tools. The Metaverse effectively blurs the line between creators and players.

第四是媒体融合。Metaverse也是一个不同种类媒体的共生共存之地。在这里,玩家可以获得涵盖绘画、艺术、音乐、电影等领域的充分的跨媒体体验。

The fourth pillar is media convergence. The Metaverse is also a place where different media industries can coexist, and where players are invited to live cross-media experiences around art, music, or movies.

第五是自有经济体系。因为在Metaverse中,玩家应该能够挣钱,或者能获得被系统认可和被其他玩家重视的技能。我认为在这一方面上,区块链和NFT等技术将会发挥重要作用。

The fifth pillar is an integrated and functional economy. In the Metaverse, players should have the opportunity to earn money or to acquire skills that are recognized by the system and valued by other players. This is where technologies like blockchain and NFTs can play a big role.

最后是可扩展性。只有拥有了技术的可扩展性,Metaverse才能把大量的玩家聚集在一个服务器上共享生活,而不是让玩家分散在多个服务器上各自为营。

Finally, scalability: the Metaverse depends on scalable technology that allows a large number of players to congregate on a single server and share moments together, instead of being split across multiple servers.

AI将发挥哪些作用?在这里,我说的不是游戏机器人AI,而是指机器学习和深度学习技术。

Now, looking at this big picture, you may wonder where artificial intelligence fits in. Here I am not talking about AI in the sense of game bots, but about machine learning and deep learning techniques.

当我们谈到AI,我们会把它比喻为电力,因为它的作用将是颠覆性的。今天,我的演讲重点是数字内容创作。

When we think about it, AI is a little like electricity: it has the potential to revolutionize all of these domains. In today's presentation, however, I'd like to focus on digital content creation.

尤其是在Vtuber和内容创作者身上,我们看到了这个趋势,他们在为Metaverse作准备。他们购置了相对昂贵的硬件,你可以在这里的图片上看到动作捕捉服装、头显等等。他们创建自己的虚拟角色,也就是另一个自我。

In particular, we see a big trend around VTubers and content creators, who are preparing themselves for the Metaverse. They equip themselves with relatively expensive hardware (you can see motion capture suits, headsets, and so on in the pictures here), and they create their own digital avatar, their alter ego.

但要做到这一点,除了硬件投资之外,3D和动画的技能也很重要。尤其是面部动画是一个挑战。

Beyond the hardware investment, achieving this still demands considerable skill in 3D and animation. Facial animation in particular is a challenge.

对于一个虚拟化身来说,要想看起来好,要想看起来逼真,它需要在语言、所承载的情感和面部动画,包括眼睛、眉毛、嘴唇等之间实现完美的匹配。

For a virtual avatar to look good, to look realistic, it needs a perfect match between the speech, the emotion it carries and the animation of the face, including the eyes, the eyebrows, lips, and so on.

在电子游戏的背景下,面部动画实际上是相当昂贵的,特别是当你将游戏本地化为不同的语言时。在我们的案例中,我们支持在9到10种语言的本地化。相同的内容用不同语言来说,时长也不一样。

In the context of video games, facial animation can also be quite expensive, in particular when you localize games into different languages. At Ubisoft, we localize the voices in our games into 9 to 10 languages, and the same line spoken in different languages has a different duration.

例如,德语经常有很长的句子和单词,相比之下,英语要更短一些,因此相对应的唇部动作也不一样。虚拟人物必须根据语言特点制作面部动画。手动制作这些面部动画,成本就会很高。

For example, German is known for very long sentences and words, and the mouth animation has to stay perfectly in sync with the speech. English, by comparison, tends to be shorter, so the virtual character has to adapt to each language. Creating all of those facial animations manually would be expensive.

针对这一情况,在育碧有个名为La Forge的团队一直在研究这个问题的解决方案。他们在语音数据的基础上训练神经网络,该网络接收包含对话的音频文件,并输出一个序列的音素。

Our teams at Ubisoft, in particular Ubisoft La Forge, have been working on a solution to this problem. They trained a convolutional neural network on speech data: the network takes as input an audio file containing dialogue lines and outputs a sequence of phonemes.
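The talk does not say how the network's output is decoded into a phoneme sequence. A common pattern for frame-level classifiers is to pick the most likely phoneme for each audio frame and then merge runs of identical labels into timed segments. The sketch below assumes that pattern. The 10 ms frame hop, the `PhonemeSegment` type, and the `frames_to_segments` helper are hypothetical and are not La Forge's implementation.

```python
from dataclasses import dataclass


@dataclass
class PhonemeSegment:
    phoneme: str
    start: float  # seconds
    end: float    # seconds


def frames_to_segments(frame_probs, labels, hop_seconds=0.01):
    """Collapse per-frame phoneme probabilities into timed phoneme segments.

    frame_probs: one list of probabilities per audio frame, ordered like `labels`.
    labels: phoneme names.
    hop_seconds: assumed time step between consecutive frames (hypothetical).
    """
    segments = []
    for i, probs in enumerate(frame_probs):
        # Greedy decoding: keep the most likely phoneme for this frame.
        best = max(range(len(labels)), key=lambda k: probs[k])
        phoneme = labels[best]
        start = i * hop_seconds
        end = start + hop_seconds
        if segments and segments[-1].phoneme == phoneme:
            segments[-1].end = end  # same phoneme as the previous frame: extend the run
        else:
            segments.append(PhonemeSegment(phoneme, start, end))
    return segments
```

Greedy per-frame decoding is only the simplest option. Smoothing or sequence-level decoding may behave better on noisy output.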

对于不深究语言学的人来说,音素就是声音单位,每个音素有着相对应的口型。

For people who are not into linguistics, phonemes are units of sound, and each one can be mapped to a mouth shape.

然后,这个序列的音素被转化成嘴唇和嘴巴的动画,对于懂行的人来说,就是所谓的f-曲线。

This sequence of phonemes is then converted into lip and mouth animation, in the form of what people familiar with the domain call f-curves (animation curves).
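Here is a minimal sketch of the phoneme-to-animation step. It maps timed phonemes to mouth shapes (visemes), builds one keyframe curve per viseme, and samples that curve with linear interpolation. The `PHONEME_TO_VISEME` table, the triangular weight ramp, and all function names are illustrative assumptions. Production rigs define their own viseme sets and interpolation.

```python
# Hypothetical phoneme -> viseme (mouth shape) table; real rigs define their own sets.
PHONEME_TO_VISEME = {
    "AA": "open", "AE": "open",
    "M": "closed", "B": "closed", "P": "closed",
    "F": "lip_bite", "V": "lip_bite",
    "sil": "rest",
}


def build_fcurves(segments):
    """Build one keyframe curve [(time, weight), ...] per viseme.

    segments: time-ordered (phoneme, start, end) tuples in seconds. Each viseme's
    weight ramps from 0.0 at the segment edges up to 1.0 at the segment midpoint.
    """
    curves = {}
    for phoneme, start, end in segments:
        viseme = PHONEME_TO_VISEME.get(phoneme, "rest")  # unknown phonemes fall back to rest
        mid = (start + end) / 2
        curves.setdefault(viseme, []).extend([(start, 0.0), (mid, 1.0), (end, 0.0)])
    return curves


def sample(curve, t):
    """Linearly interpolate a time-sorted keyframe curve at time t, clamping at both ends."""
    if t <= curve[0][0]:
        return curve[0][1]
    for (t0, v0), (t1, v1) in zip(curve, curve[1:]):
        if t0 <= t <= t1:
            if t1 == t0:  # duplicate keys where two segments of the same viseme touch
                return v1
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return curve[-1][1]
```

Animation curves in DCC tools usually use smoother (for example Bézier) interpolation. Linear segments just keep the sketch short.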

我们刚才说了面部,那身体呢?现在在学术界有一个很大的、非常热门的研究课题,我们称之为 "pose estimation(人体姿态估计)"。这项研究试图根据二维图像或视频生成人体的三维坐标,即骨骼的不同关节。这是一个非常困难的研究课题。德国Max Planck研究所曾发表了一篇非常先进的论文。

We talked about the face, but what about the body? There is a very hot research topic in academia right now called pose estimation. It consists in trying to generate the 3D coordinates of the human body, that is, of the different joints of the skeleton, from 2D images or videos. It is a very difficult research problem. What you see here is actually not from Ubisoft; it is a state-of-the-art paper published by the Max Planck Institute in Germany.
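Part of what makes 2D-to-3D pose estimation hard is that a single image loses depth. The sketch below assumes a simple pinhole camera and uses made-up joint positions. It shows that a pose, and the same pose scaled up and moved twice as far away, project to identical 2D points. A learned model has to resolve this ambiguity using priors it picked up in training.

```python
def project(joints_3d, focal=1.0):
    """Pinhole projection of camera-space joints (x, y, z), with z > 0, onto the image plane."""
    return [(focal * x / z, focal * y / z) for x, y, z in joints_3d]


# Toy hip / knee / ankle chain in camera space (metres); the values are made up.
pose = [(0.0, 0.0, 3.0), (0.1, -0.45, 3.1), (0.05, -0.9, 3.0)]

# The same pose, twice as large and twice as far from the camera.
bigger_and_farther = [(2 * x, 2 * y, 2 * z) for x, y, z in pose]

# Both poses produce the same 2D keypoints, so scale and depth cannot be read off one image.
keypoints_a = project(pose)
keypoints_b = project(bigger_and_farther)
```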

他们不仅开发出一种技术来预测身体的坐标和三维模型,而且试图预测生成网格和身体的形状,我们把它称为身体姿势和形态估计。这种研究对于像我们这样的游戏行业团队来说是非常有启发的。

In their case, they developed a technique to predict not only the coordinates of the body but also its 3D model, that is, the mesh and body shape. We call this body pose and shape estimation. This kind of research is very inspiring for teams like ours in the video game industry.

于我们而言,在育碧中国,我们从事大量的野生动物研发,多年来潜心研发《孤岛惊魂》系列,打造了其中非常知名的动物角色。对人类进行动作捕捉是一项挑战,更不要说野生动物,比如熊、大象或是老虎。因此,我们决定充分利用团队之前的工作成果。

In our case, at Ubisoft China, we work a lot on animals and wildlife. We have a long history working on the Far Cry brand, for which we developed its most iconic animals. The research I mentioned on humans is already challenging, because motion capture data for humans is difficult to acquire. But think about wild animals like a bear, an elephant, or a tiger: acquiring such data to train an AI is even more difficult. So our idea was to leverage previous work done by our teams.

在过去八年里,我们的动画师都是手K动物动画的,我们称之为关键帧动画。我们用它来生成训练数据,以获得与人类动作捕捉相近的效果。具体来说,就是建立一条生产管线,输入2D视频和骨骼模板,输出动物骨骼的三维坐标。

Over the last 8 years, our animators created many animal animations manually, which we call keyframe animation. We used them to generate training data, aiming for results similar to what you saw on humans. The idea is to build a pipeline that takes as input a video and a template skeleton, and generates as output the 3D coordinates of the animal skeleton.
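One plausible way to turn keyframed animations into training data is to view each 3D pose through several virtual cameras and record the resulting 2D keypoints alongside the 3D joints. The talk does not detail how ZooBuilder's data was generated. The random yaw cameras, the fixed camera distance, and the `make_training_pairs` helper below are assumptions made for illustration.

```python
import math
import random


def rotate_y(point, angle):
    """Rotate a 3D point around the vertical (y) axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def make_training_pairs(keyframed_poses, views_per_pose=4, distance=5.0, seed=0):
    """Turn keyframed 3D poses into (2D keypoints, 3D joints) training pairs.

    keyframed_poses: list of poses; each pose is a list of (x, y, z) joints in the
    animal's local space, assumed small compared with `distance`.
    Each pose is rotated to a random yaw, pushed `distance` units in front of a
    pinhole camera, and projected to 2D.
    """
    rng = random.Random(seed)
    pairs = []
    for pose in keyframed_poses:
        for _ in range(views_per_pose):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            rotated = [rotate_y(joint, angle) for joint in pose]
            cam_space = [(x, y, z + distance) for x, y, z in rotated]  # in front of the camera
            keypoints_2d = [(x / z, y / z) for x, y, z in cam_space]
            pairs.append((keypoints_2d, cam_space))
    return pairs
```

Rotating the pose only changes the viewpoint, so bone lengths stay the same while each 2D view differs. That is what gives a lifting model varied examples of the same motion.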

我们最终成功建立了这条生产管线,并将其命名为ZooBuilder。你可以看到这里的不同组件。输入2D视频后,它将视频转换为一连串的图像,并将这些图像提供给第一个机器学习模型,该模型负责定位图像上的动物。

We eventually built that pipeline, which we call ZooBuilder. You can see the different components here. It takes a video as input, converts it into a sequence of images, and provides those images to a first machine learning model that locates the animal in each image.

然后,我们将裁剪过的图像序列提供给第二个机器学习模型,这个模型是用我们的合成数据,也就是人工制作的动物动画数据重新训练过的。

Then we provide this sequence of cropped images to a second machine learning model, which we retrained on our synthetic data, that is, our animal animation data.
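Between the first and second models, the detector's bounding box has to become a crop. The 2D keypoints predicted on that crop then have to be mapped back to the full frame. In this sketch, the padding ratio, the square resize to `crop_size`, and both helper names are assumptions, not details taken from ZooBuilder.

```python
def crop_box(bbox, image_w, image_h, pad=0.1):
    """Expand a detector box (x0, y0, x1, y1) by `pad` of its size, clamped to the image."""
    x0, y0, x1, y1 = bbox
    dw, dh = (x1 - x0) * pad, (y1 - y0) * pad
    return (max(0, x0 - dw), max(0, y0 - dh), min(image_w, x1 + dw), min(image_h, y1 + dh))


def to_full_image(keypoints_in_crop, box, crop_size):
    """Map keypoints predicted on a crop resized to crop_size x crop_size back to the frame."""
    x0, y0, x1, y1 = box
    sx, sy = (x1 - x0) / crop_size, (y1 - y0) / crop_size  # undo the resize on each axis
    return [(x0 + x * sx, y0 + y * sy) for x, y in keypoints_in_crop]
```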

第二个机器学习模型输出骨骼在图像上的2D坐标,我们把这些2D坐标提供给第三个机器学习模型,它负责把2D坐标转换为3D。最后,3D坐标被应用在一个3D模型上。

This second model outputs the 2D coordinates of the skeleton in the image. We then provide those 2D coordinates to a third model, which converts them to 3D. Finally, the 3D coordinates are applied to a 3D model.
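The three-stage flow can be written as a simple composition of models. In this hypothetical sketch, the models are passed in as plain callables (`detect`, `estimate_2d`, and `lift_to_3d` are placeholder names). The 3D lifter receives the whole sequence of 2D poses at once. That choice is an assumption based on common video-based lifting models, not something stated in the talk.

```python
from typing import Callable, List, Sequence, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def zoo_pipeline(
    frames: Sequence,
    detect: Callable,
    estimate_2d: Callable[..., List[Point2D]],
    lift_to_3d: Callable[[List[List[Point2D]]], List[List[Point3D]]],
) -> List[List[Point3D]]:
    """Run video frames through detection, 2D keypoint estimation, and 3D lifting."""
    poses_2d = []
    for frame in frames:
        crop = detect(frame)                # stage 1: locate and crop the animal
        poses_2d.append(estimate_2d(crop))  # stage 2: 2D skeleton on the crop
    return lift_to_3d(poses_2d)             # stage 3: lift the 2D sequence to 3D
```

Keeping each stage behind a callable makes it easy to swap in a retrained model for one stage without touching the others.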

结果很不错,但是还没有投入生产,只是停留在研发阶段。因为动画效果仍然有一点不完美,而且要让它也能应用于其它动物,还是一项挑战。

It is showing promising results, but to be completely transparent, it is not used in production yet. It is still very much a research topic: the animation is still a little imperfect, and making it scale to many different animals is also a challenge.

但是这种技术绝对可以帮助创造更多的基于2D视频的动画片段,而不是使用动作捕捉基础设施或动作捕捉硬件,无论是动物还是人类。

But this kind of technique can definitely help create more animation clips from 2D videos, rather than relying on motion capture infrastructure or hardware, whether for animals or humans.

解决了这些问题以后,我们仍然面临很多挑战。在Metaverse中制作虚拟角色,我们还只是走到了半道上。这些动画需要被整合,需要被组合在一起。因此,我想再谈一谈动画合成。

Now, creating those animation clips is only halfway to animating virtual characters in the Metaverse. Those animations need to be integrated and combined. This is why I want to talk a bit about animation blending.

我先解释一下在传统方式下是如何做的。一个动画师通常会开发一个我们称之为动画图或动画树的东西,它由不同的动画片段、不同的叶子组成。根据玩家的输入或自动输入,虚拟角色将在该图中移动并播放这些动画。

I'll first explain how it's done the traditional way. An animator usually builds what we call an animation graph or animation tree, composed of different leaves corresponding to different animation clips. Based on player input or automated input, the virtual character moves through that graph, activating and playing those animations.
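An animation graph can be modeled as a state machine. Its nodes are clips, and its edges are triggered by input events. The clip names, events, and `play` helper below are hypothetical. Real engines add blend times, conditions, and layered graphs on top of this basic idea.

```python
# Hypothetical animation graph: each key is a clip, each inner mapping says which
# input event moves the character to which next clip.
ANIMATION_GRAPH = {
    "idle": {"move": "walk", "jump": "jump"},
    "walk": {"stop": "idle", "sprint": "run", "jump": "jump"},
    "run": {"slow": "walk", "jump": "jump"},
    "jump": {"land": "idle"},
}


def play(graph, start, inputs):
    """Return the sequence of clips played while feeding `inputs` through the graph.

    Events with no matching edge leave the current clip playing.
    """
    clip = start
    played = [clip]
    for event in inputs:
        next_clip = graph[clip].get(event)
        if next_clip is not None and next_clip != clip:
            clip = next_clip
            played.append(clip)
    return played
```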

为了使它看起来漂亮流畅,有一个要求,即第一个动画片段的最后一帧,应该与第二个动画片段的第一帧相匹配。因此,行走周期的结束应该与跑步周期的开始或跳跃动画的开始相匹配。如果不匹配的话,动画看起来就会有一点不连贯或不自然。

For this to look nice, there is a requirement: the last frame of the first animation clip should match the first frame of the second clip. So the end of the walk cycle should match the beginning of the run cycle or of the jump animation. If it doesn't match, the animation looks a little jittery and unnatural.
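The frame-matching requirement can be checked numerically by measuring how far each joint jumps between the last frame of one clip and the first frame of the next. This sketch assumes poses are lists of (x, y, z) joint positions in the same skeleton order. The 0.02 tolerance (read as metres) and both helper names are arbitrary illustrative choices.

```python
import math


def pose_gap(last_frame, first_frame):
    """Largest joint displacement between two poses with the same joint order."""
    return max(math.dist(a, b) for a, b in zip(last_frame, first_frame))


def transition_is_smooth(clip_a, clip_b, tolerance=0.02):
    """True if clip_a's last frame lines up with clip_b's first frame within tolerance."""
    return pose_gap(clip_a[-1], clip_b[0]) <= tolerance


# Toy two-joint clips: each clip is a list of frames, each frame a list of joints.
walk = [[(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0.1, 0.0, 0.0), (0.1, 1.0, 0.0)]]
run_aligned = [[(0.1, 0.0, 0.0), (0.1, 1.0, 0.01)]]
run_misaligned = [[(0.5, 0.0, 0.0), (0.5, 1.0, 0.0)]]
```

Here `transition_is_smooth(walk, run_aligned)` passes, while the misaligned run clip would produce the visible pop described above.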

下面是一个例子(播放动画)。正如你所看到的,这个动画有一点不连贯。它不时地跳动,破坏了沉浸感。

Here is an example of how it looks: a character walking on a plane. As you can see, the animation is a little jittery. It jumps from time to time, which breaks the immersion.

解决这个问题的一种技术是添加更多的动画,即用过渡动画来填补动画片段之间的空隙。但这种技术会变得非常复杂,非常难以管理。它的复杂性在不断增加,你加的动画越多,维护难度越大。

One technique to solve this is to add more animations, namely transition animations, to fill the gaps between animation clips. But this approach can become very complex and difficult to manage: the more animations you add, the harder the graph is to maintain.

解决这个问题的另一种技术方案就是我们所说的动作匹配。它会把所有这些动画片段放在一个内存数据库中。之后,你可以通过一个搜索算法,根据玩家的输入,如角色双脚在地面上的位置、运动轨迹,来搜索最佳匹配的动画帧,然后将该帧提供给游戏引擎。它的工作效果相当好。

Another technique to solve this challenge is what we call motion matching. It consists in taking all those animation clips and putting them in an in-memory database. A search algorithm then uses player input, such as the character's feet positions and trajectory, to find the best-matching animation frame and provides that frame to the game engine. This works fairly well.
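At its core, the motion matching search is a nearest-neighbor lookup over per-frame feature vectors. The brute-force sketch below assumes a hypothetical feature layout (feet positions plus one future trajectory point) and a weighted squared distance. It is not Ubisoft's implementation.

```python
def match_cost(query, candidate, weights):
    """Weighted squared distance between two feature vectors."""
    return sum(w * (q - c) ** 2 for q, c, w in zip(query, candidate, weights))


def motion_match(database, query, weights):
    """Brute-force motion matching search.

    database: list of (clip_name, frame_index, features) entries. Features use a
    hypothetical layout: [left_foot_x, left_foot_z, right_foot_x, right_foot_z,
    future_traj_x, future_traj_z].
    Returns (clip_name, frame_index) of the best-matching frame.
    """
    best = min(database, key=lambda entry: match_cost(query, entry[2], weights))
    return best[0], best[1]


database = [
    ("walk", 0, [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]),
    ("walk", 10, [0.2, 0.0, -0.2, 0.0, 1.0, 0.0]),
    ("turn_left", 5, [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]),
]
```

Every query scans the whole database, so the cost grows linearly with the number of stored frames. That is the scaling issue the talk turns to next. Production systems typically add feature normalization and acceleration structures on top of this basic search.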

(播放动画)这是与之前相同的例子,但启用了动作匹配,你可以看到角色动画更流畅了。这里的挑战是,这种技术的开销会随着数据库中动画数量的增加而线性增长。如果你想要更丰富的动作,你就需要更多的动画,内存和计算成本也就会变高。

This is the same example as before, but with motion matching activated. You can see the character animation is a lot smoother. The challenge here is that this technique scales linearly as you add more animation to the database: if you want more diversity, you need more animation, which increases the memory and compute requirements.

育碧的团队采用了一种新的技术手段,灵感来源于动作匹配,我们称之为学习动作匹配。在这里,我们实际上用一个神经网络取代了搜索,这个神经网络已经过训练,可以根据角色双脚的位置和其它输入来输出这些动画帧。

Our teams at Ubisoft worked on a new approach inspired by motion matching, which we call learned motion matching. We replaced the search with a neural network trained to output those animation frames based on the character's feet positions and other inputs.
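The key idea is that a fixed-size network stands in for the database search. Memory then depends on the number of network parameters rather than on how much animation is stored. The untrained toy multilayer perceptron below only illustrates that trade-off. Its layer sizes and helper names are arbitrary, and the published learned motion matching system is more involved than a single network.

```python
import math
import random


def init_mlp(sizes, seed=0):
    """Random weights for a fully connected network with layer sizes such as [6, 32, 10]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        w = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((w, b))
    return layers


def forward(layers, features):
    """Map matching features (feet positions, trajectory, ...) to predicted pose values.

    Hidden layers use ReLU; the output layer is linear.
    """
    x = features
    for i, (w, b) in enumerate(layers):
        x = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


def parameter_count(layers):
    """Number of stored floats: the memory cost, independent of the animation database size."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)
```

With sizes `[6, 32, 10]`, the network holds 554 parameters (6×32 + 32 + 32×10 + 10), however many frames it was trained on.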

这种技术的好处是它的效果和传统的动作匹配一样好。你可以看一下这两者的对比(播放视频),右边是传统的动作匹配,左边是学习动作匹配,动画的质量被保留了下来。你可以看到它是相当顺畅的。相比之下,学习动作匹配在内存方面的要求要低得多,几乎只有传统方法的十分之一。

The good thing about this technique is that it works as well as traditional motion matching. Here is a comparison: on the right, traditional motion matching; on the left, learned motion matching. You can see that the quality of the animation is preserved and the animation is quite smooth. But learned motion matching is far less demanding in terms of memory, almost 10 times less than the traditional method.

好消息是,它处理动物的效果一样好(播放动画)。你可以看到一只熊在凹凸不平的地形上走路。这个动画是用学习动作匹配生成的,它动作流畅,育碧中国团队的使用体验很好。

The good news is that it works just as well for animals, including quadrupeds. Here you see a bear walking on uneven terrain. The animation was generated with learned motion matching and it is perfectly smooth, which is great for our use case in China.

这就是我想分享的与动画有关的挑战和人工智能的应用。最后做个总结。

That's all for the animation challenges and the AI applications I wanted to share. Let's wrap up.

首先,我们了解到了一条自动化生产管线,可以根据语音生成嘴唇动画。很明显,这比用视频和头显等设备进行面部捕捉更方便。第二,我们看到了用视频生成动画的新兴技术,同样也是为了摆脱所有那些昂贵的硬件。最后,学习动作匹配,用机器学习来改进现有的动画编程和动画合成。

First, we saw an automatic pipeline that generates lips animation out of speech. Obviously, this could be a lot more convenient than a facial capture with videos, headsets and so on.

Second, we saw emerging technologies and promising techniques to generate those animations from videos, again to get rid of all that expensive hardware.

Finally, we introduced learned motion matching, which uses machine learning to improve on existing animation programming and animation blending techniques.

坦率地说,在动画领域,我们用机器学习来做的所有事情,实际上只是一个开始。但通过把这三个元素放在一起,我们开始看到虚拟角色动画生产管线的未来,它的性能越来越好,也越来越便捷。长远来看,这将简化开发者的工作,让玩家从中获益。

To be frank, we are just scratching the surface of what could be done in the field of animation with machine learning. But by putting those three elements together, we start to see the future of the animation pipeline for virtual characters, which is becoming more and more performant and accessible. That is going to streamline the work of artists and, hopefully, benefit players in the long run.

我的演讲到此结束。感谢你的聆听。

This is it for my presentation. Thank you for listening.